Data Warehousing with CQL

Traditional data warehouse projects often lead to failures that are difficult to predict and difficult to analyze. Conexus AI’s CQL enables early identification of such risks and provides a solution for high quality data integration, making it possible to simultaneously accelerate data warehousing projects and reduce costs.

While there are many systems for interacting with large volumes of data, traditional data warehouses are still necessary, especially when it comes to data that must be precise. This is often the case; whether the data warehousing project is for a financial or scientific institution, for client data or company data, data integrity is a top priority. In some situations, there are even laws governing data accuracy, privacy, and provenance. However, building a data warehouse with precision, accuracy, and verifiable correctness is a hard problem for any data team to solve. It is thus no surprise that traditional data warehouse projects are often over budget or do not achieve the desired result. Our research has shown that many of the problems were of the following nature:

- Missing data (e.g., NULLs)

- Schema and data mismatches (e.g., Pete vs. Peter)

- Problems uncovered too late to fix easily (e.g., after warehouse construction)

- Data integrity assumptions fail (e.g., social security number not a primary key)

The Problem with Traditional Data Integration

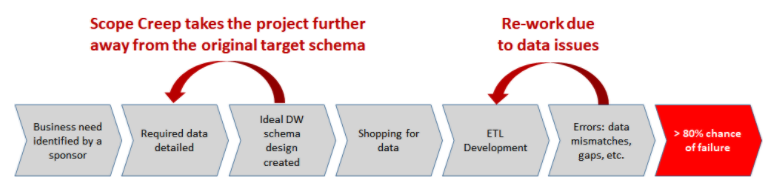

Among the multiple factors that contribute to data warehouse project failures, there are often tell-tale signs that appear early and are manageable if corrective measures are taken early. However, the traditional data warehouse project approach makes this early identification of risk nearly impossible, because users typically cannot find out what can go wrong during data integration until they actually integrate data. That integration effort involves an army of ETL developers trying to put the data together to meet the expected requirements of the target database, created prior to the full knowledge of the available data sources. The traditional warehouse project process is done backwards, because it starts with reporting requirements which in turn drive the warehouse schema design, which may or may not be constructible by the available data.

Doing Data Integration Forwards

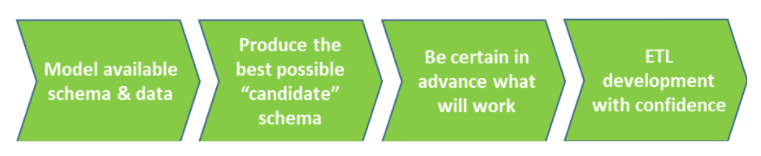

Now, imagine doing data integration forwards so that:

- Data risks are identified early, at compile/design time, before any data is moved around.

- Data integration is mathematically optimized so that reporting off the data warehouse is guaranteed to be as accurate as reporting from off data sources.

- Project risk and development time are minimized, and the process is more sustainable for projects that are still evolving.

Building a Data Warehouse with CQL

CQL provides that mathematical precision and predictability necessary for the source data model to be as accurate as possible. By using CQL to build a data warehouse, the process becomes more natural and deterministic. The basic steps are follows:

- Model the data sources in CQL.

- Provide CQL with information about how the source schemas are related. CQL provides compile-time validation.

- CQL computes the optimal integrated schema. At this point, an offline gap analysis can determine how to map the integrated schema to the target schema. Again, CQL provides compile-time validation.

- Model the desired target schema (what the business needs) in CQL.

- Provide CQL with information about how the source and the target data models are related. CQL provides both compile-time and run-time validation.

- Deploy the CQL program onto the desired back end, or execute it on the local machine, integrating data into the target database with confidence.

Additionally, as the project requirements evolve, the above steps can be repeated in an iterative manner. CQL modularizes development so that requirement changes to the target schema will not affect the integrated schema.

Schema Integration

In CQL, the integrated schema is computed using the mathematical operations of disjoint union and quotient. This process takes as input equations relating the tables and columns of the input schemas and as output provides what is called colimit schema.

Unlike traditional ETL tools, the relations between columns in CQL need not be simple correspondences. It can involve complex relationships that more closely represent the business rules embedded in the underlying data.

CQL models and integrates not just individual tables and columns, but entire schemas, including all known data integrity constraints.

Data Integration

Next, landing the source data onto the integrated schema is fully automated using CQL’s sigma operation, which maps each data source onto the integrated schema individually, followed by the merging of the disjoint union of those databases.

Again, CQL considers all the equations specified in the initial model and in the integrated schema to ensure that the result is mathematically correct.

Migrating to the Target Schema

Finally, the target schema defined by the business requirements for the data warehouse is populated with confidence, because all of the mappings and queries were validated in advance.

Iterating

Because CQL applies mathematical rigor to all of the steps, it also keeps track of the history of the schema and data within the scope of its operations. The result is that incremental changes to both the source and the target schemas can be handled easily by CQL.

Conclusion

Traditional data warehouse projects have typically used the approach of starting with business requirements and then trying to build a data warehouse that meets them without validating that the necessary data even exists or that they will fit together. This process often results in unmet requirements that cannot be discovered until well into the implementation effort, resulting in costly rework or a failure. Although the idea of building a data warehouse by starting with knowledge of source data may not be new, the technology to deliver on this idea is—CQL’s mathematical certainty and precision provides the first tool of its kind.

CQL is not a database and it is not a server. It is a functional programming language and a dedicated IDE for ETL tasks. It provides the means to execute all the data integration tasks necessary to create a precision data warehouse, where critical schema and data mappings are validated by the rigor of mathematics at both compile time and run time, thereby reducing risk, improving implementation speed, and reducing cost.

About Conexus

CONEXUS IS THE WORLD’S ONLY VERIFIABLE DATA INTEGRATION TECHNOLOGY. Conexus CQL drives real-time inter- and intra-enterprise scaled data integration at the pace of data growth and business evolution. Conexus: evolve gracefully.