New Capabilities for New Challenges

Abstract

Conexus is interested in working with selected health care researchers who want to apply our expertise in Applied Category Theory to high-impact projects related to the COVID-19 Public Health Emergency. This brief note presents how Conexus might assist enterprises in their responses.

Conexus’ Enterprise Category Theory (EntCT) uses methods and software tools to work on intractable problems and expedite results. Here we discuss how our techniques can be applied to supporting research processes, cleaning up and preparing data, and protecting data fidelity during transfers between applications.

Supporting Research Process

Driving for results in high-risk, high-reward, and high-pressure environments forces dependence on good process methodology and process organization. Good process methodologies use tested and proven sequences of steps to ensure that a result of known quality is achieved (even if that result is a failure). Good process organization applies resources to the process tasks so that specific objectives are achieved and resources are used efficiently. For example, adding more resources at the wrong stage does not make a process complete faster; instead, it slows the process down (Brooks’ Law). Good organization enables resources to be used to complete some tasks more rapidly (such as running multiple tests in parallel) or more efficiently (such as using automated regression and functional testing).

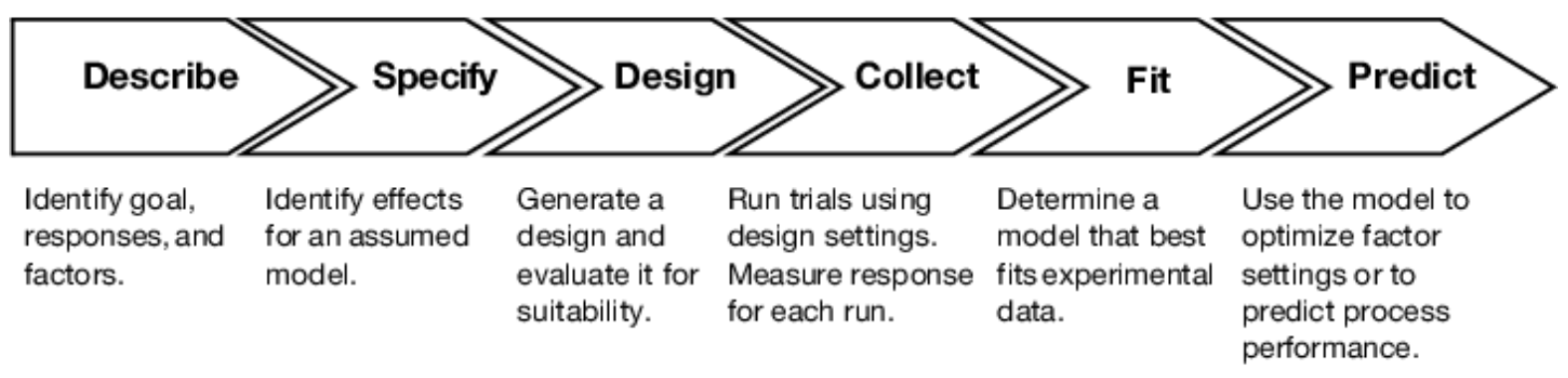

In this discussion, consider the Design of Experiment (DoE) process, which is widely used, including in the health care field. Experimental design is discussed at many levels and from many perspectives [Conf01], [Gold02]. Design of Experiment as a process is widely discussed as well:

“As per available reports about 28 relevant journals, 25 Conferences, 45 workshops are presently dedicated exclusively to Design of experiment and about 2,070 articles are being published on Design of experiment” [Conf03]

The Design of Experiment uses a rigorous mathematical and statistical approach to capturing the characteristics of the subject population and measurements, and to developing models from the data for use in evaluating the experimental process and analyzing results.

A complicating factor in the development of any experimental design is the embedded data design that encodes the data model (organization), structure (relationships), and elements (values stored). The implementation of the data design by software tools and, often, custom software is also subject to design flaws and implementation errors that can impair the utility and analysis of the data that is the subject of the Design of Experiment.

Enterprise Category Theory seeks to reduce the introduction of faults during design and implementation. EntCT does this by focusing on the data throughout the computer application’s process, with particular attention to process needs. For Design of Experiment, EntCT accomplishes this through the application of tools and spot tasks. These tasks add rigor to the mapping from the statistical experimental design to the data models that support the experimental model. EntCT techniques introduce tasks to protect data fidelity across transcriptions and transformations, map data interfaces and data models into specific access coding, and provide an audit method for data.
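As an illustration of what an audit of data fidelity across a transformation might look like, the following is a minimal sketch in plain Python. It is not the Conexus tooling or method; the function name `audit_transformation`, the field names, and the sample rows are all hypothetical. It checks that no record keys are dropped or invented by a transformation and that a stated constraint still holds on the transformed data.

```python
def audit_transformation(source_rows, target_rows, key, constraints):
    """Compare a source table with its transformed copy and list fidelity problems.

    All names here are hypothetical; this is an illustrative sketch only.
    """
    problems = []
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}

    # Records that disappeared during the transformation.
    for missing in sorted(source_keys - target_keys):
        problems.append(f"dropped: {missing}")
    # Records that appeared without a source.
    for extra in sorted(target_keys - source_keys):
        problems.append(f"unexpected: {extra}")
    # Constraints that must hold on every transformed record.
    for name, check in constraints.items():
        for row in target_rows:
            if not check(row):
                problems.append(f"{name} violated: {row[key]}")
    return problems


# Hypothetical example: doses converted from milligrams to grams.
source = [
    {"subject_id": "S1", "dose_mg": 50},
    {"subject_id": "S2", "dose_mg": 75},
    {"subject_id": "S3", "dose_mg": 100},
]
target = [
    {"subject_id": "S1", "dose_g": 0.05},
    {"subject_id": "S2", "dose_g": -0.075},  # sign error introduced in transit
]
constraints = {"dose_positive": lambda row: row["dose_g"] > 0}

problems = audit_transformation(source, target, "subject_id", constraints)
print(problems)  # ['dropped: S3', 'dose_positive violated: S2']
```

The design choice in this sketch is to report every problem rather than stop at the first one, so that the output can serve as an audit record for the transfer.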

In summary, Conexus applies EntCT to provide rigorous support for Design of Experiment process steps and to improve the alignment of data model, experimental design, and experimental model.

Supporting Novel Epidemiological Tracing

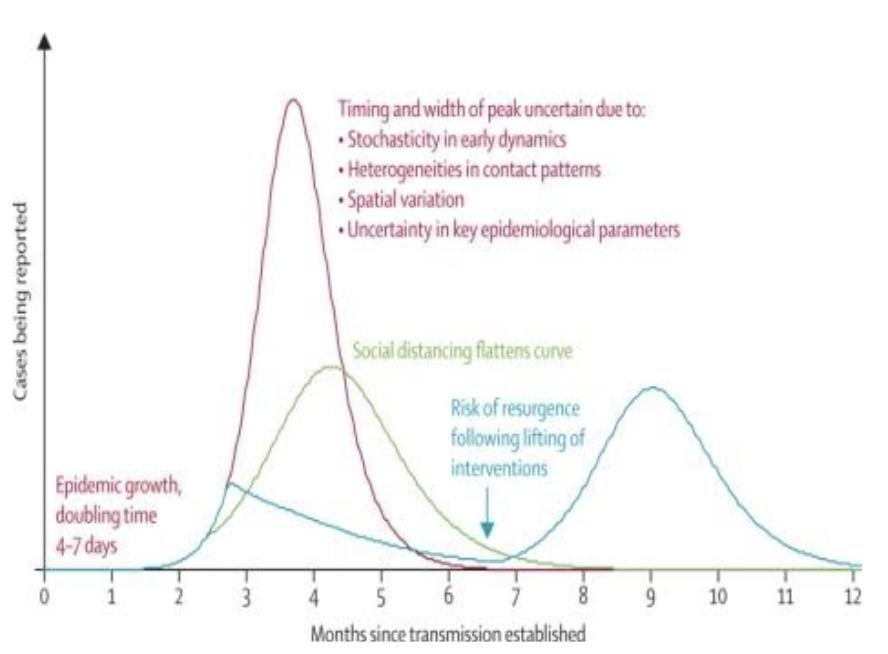

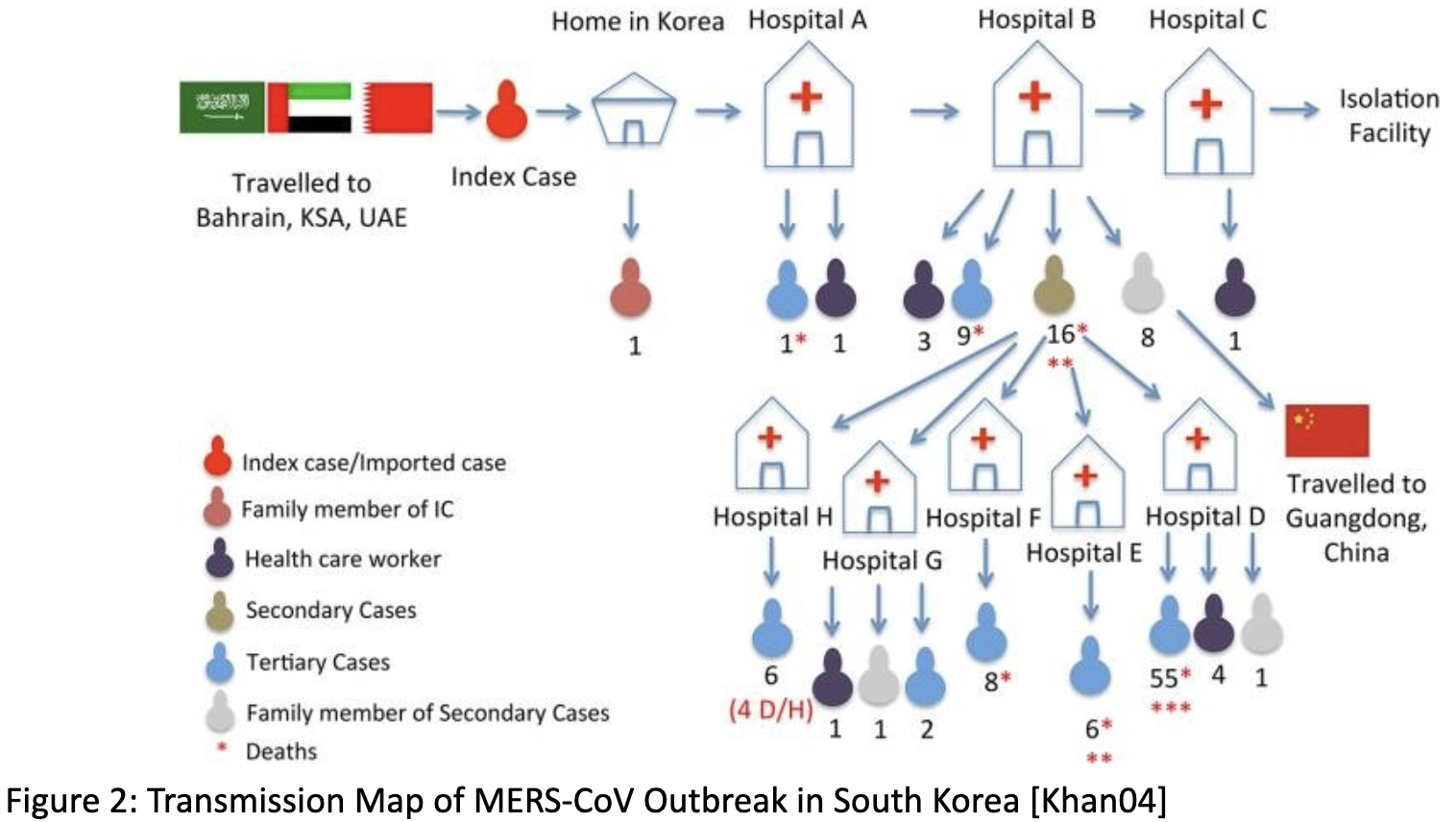

The Republic of Korea’s Center for Disease Control (KCDC) learned key lessons from a 2015 outbreak of the coronavirus MERS-CoV. [Kahn04] In that outbreak, 168 cases were eventually traced to a single individual who brought the virus back from a trip to the Middle East. The outbreak also carried the risk of continued spread to additional countries.

In response to that incident, new epidemiological tracing processes were created, and possible process improvements on a global scale were discussed internationally. [Flah05] These discussions included the mooted use of additional digital technologies to perform time-critical tracing during large-scale outbreaks. Presciently, the possible pandemic occurrence of a coronavirus was specifically considered in June 2019:

“The greatest threat of airborne viruses is due to the lack of measures to control airborne spread. Examples from the recent past highlight the pandemic potential of these viruses: the outbreaks of SARS-CoV in 2002/2003, the pandemic of influenza A/(H1N1)pdm09 in 2009 and the pandemic of MERS-CoV in 2012 with an unexpected international spread and a mortality rate of 40% (1). When a new respiratory pathogen emerges, immediate responses include increased surveillance, diagnostic assays to identify cases and ad-hoc mitigation strategies, like quarantining diseased persons. In the SARS-CoV outbreak, these measures eliminated the virus from the human population, but in the pandemic influenza A/(H1N1)pdm09, mitigation strategies had limited success and the virus quickly circulated around the globe. We are unlikely to ever been able to eradicate such viruses for which the human and animal reservoir is enormous. Therefore, continuing surveillance for local and imported cases and putative changes in virulence is essential.” [Flah05]

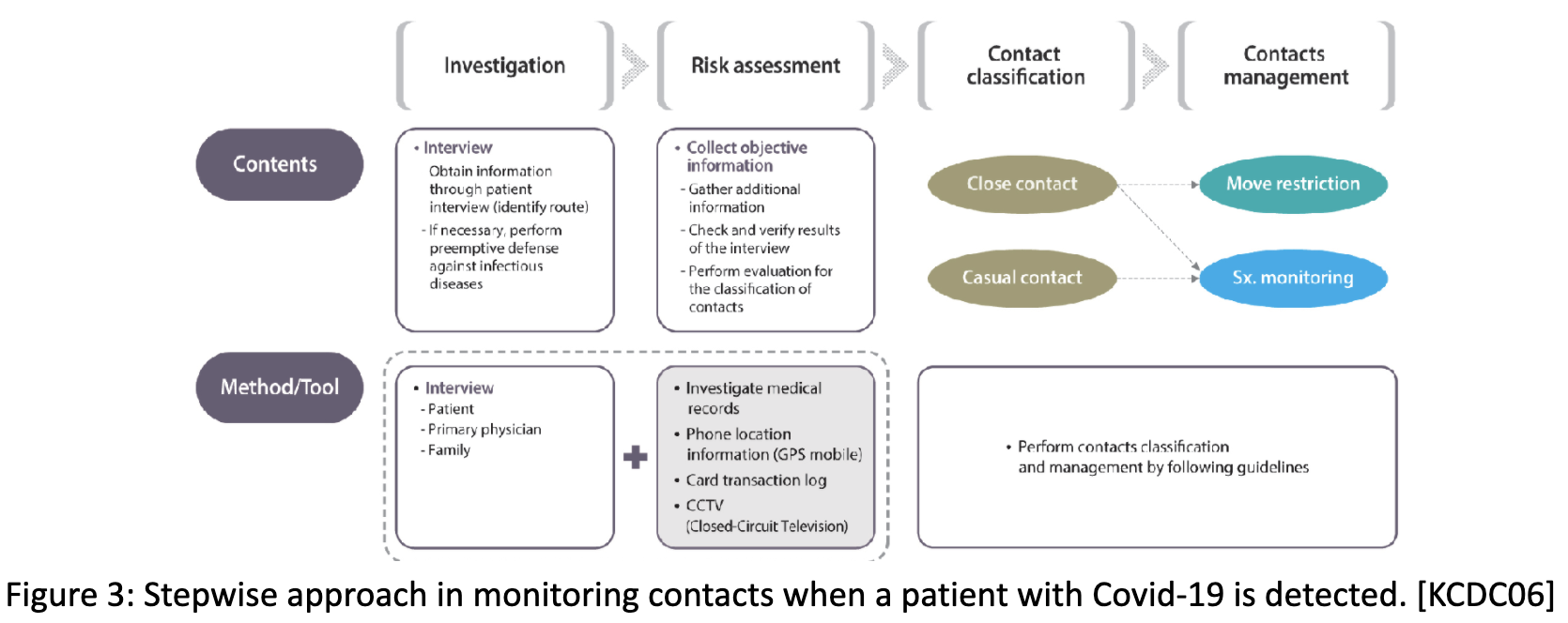

In Korea, epidemiological policy, process, and procedures improved by incorporating unstructured ‘big data’ sources into the methods used by investigators.

The process integrates rapid (although time-consuming for investigators) analysis of available electronic health records (EHR), geotracking for risk assessment and possible contact evaluation, and risk management through electronic or automated movement tracking and remote interactions. For even a moderate population, this integration of large-scale structured and unstructured data presents significant problems and resource requirements if the analysis is to be performed in a timely fashion that meets the needs of governance, policy makers, and health delivery systems.

For the population of COVID-19 patients, the Korean experience has been held up and applauded as extraordinary in a time of new challenges. The KCDC reports tracing a population of 8,320 cases, with 81 deaths and 1,401 cases discharged from isolation. [KCDC07] The epidemiological tracing found links in 80.6% of cases, with the other 19.4% under continuing investigation. In addition to addressing the needs of governance and policy makers, the KCDC teams have reported results:

“The use of methods that have objectively verified the patient’s route claims (medical facility records, GPS, card transactions, and CCTV) for COVID-19 contact investigations in South Korea has provided accurate information on the location, and time of exposure, and details of the situation, thus reducing omissions in a patient’s route due to recall or confirmation bias that may have arisen from patient or proxy interviews.”

The KCDC teams were acting under the norms of an open democratic society and were conscious of other limitations of their methods, and they documented these as:

“However, the publicization of a patient’s route for the public’s benefit infringes upon the privacy of the patient. Thus, it is necessary to establish a protocol to protect privacy. Equally, more effort should be taken to provide accurate information to the public. Patient information that is unrelated to the communication of risk of infectious diseases must be protected by clearly defining the standards of publicization and reporting of patient information for the public’s benefit when an infectious disease is prevalent.” [KCDC07]

Limitations in digitally assisted tracing processes have also become apparent. These limitations are common to analytical and operational uses of high-volume data feeds from sources such as 4G/5G smart devices, CCTV video, and financial transaction networks. Specific issues common to the analysis of these domains of data include:

- Failure to process unstructured data (video, location data, social media feeds) in a timely fashion

- Requirements to discriminate and discard ‘noise’ (unwanted or irrelevant data), ‘dirty’ data (corrupted), and ‘invalid’ data (not in the time range under consideration)

- Difficulty in translation and interpretation of complex data (electronic or manual medical records, including prescription data, laboratory diagnostic results, and notes from medical personnel, including dictation)

- Inability to apply data techniques to discern, infer, or continue to function with missing data

- Requirements to respect legal, regulatory, and cultural restrictions on data (such as privacy, surveillance, and association)
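The distinction drawn in the list above between ‘noise’, ‘dirty’, and ‘invalid’ data can be made concrete with a small sketch. The following Python is an assumption-laden illustration, not a description of the KCDC systems: the record layout (`device_id`, `timestamp`, `lat`, `lon`), the function name `classify_ping`, and the sample values are all hypothetical.

```python
from datetime import datetime


def classify_ping(ping, window_start, window_end, relevant_devices):
    """Label one location record as 'dirty', 'invalid', 'noise', or 'usable'.

    Hypothetical record layout: device_id, timestamp (ISO 8601), lat, lon.
    """
    # 'Dirty': the record is corrupted and cannot be parsed or is out of range.
    try:
        when = datetime.fromisoformat(ping["timestamp"])
        lat, lon = float(ping["lat"]), float(ping["lon"])
    except (KeyError, TypeError, ValueError):
        return "dirty"
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        return "dirty"
    # 'Invalid': well formed, but outside the time range under consideration.
    if not (window_start <= when <= window_end):
        return "invalid"
    # 'Noise': well formed and in range, but irrelevant to the investigation.
    if ping.get("device_id") not in relevant_devices:
        return "noise"
    return "usable"


window_start = datetime(2020, 2, 25)
window_end = datetime(2020, 3, 10)
relevant_devices = {"A", "B"}

pings = [
    {"device_id": "A", "timestamp": "2020-03-01T10:00:00", "lat": "37.57", "lon": "126.98"},
    {"device_id": "A", "timestamp": "not-a-time", "lat": "37.57", "lon": "126.98"},
    {"device_id": "A", "timestamp": "2020-01-15T10:00:00", "lat": "37.57", "lon": "126.98"},
    {"device_id": "Z", "timestamp": "2020-03-02T08:30:00", "lat": "37.57", "lon": "126.98"},
]

labels = [classify_ping(p, window_start, window_end, relevant_devices) for p in pings]
print(labels)  # ['usable', 'dirty', 'invalid', 'noise']
```

Checking for corruption first means that a record is only judged on its time range or relevance once it is known to be readable.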

The KCDC experience also lists key objectives of the epidemiological tracing process that address multiple stakeholders’ requirements and needs for information:

- collaboration of various related persons,

- a strict verification process,

- using systematic processes,

- scientific methods and principles,

- cycles of continuous evaluation and feedback,

- for the infectious disease: identify the etiology, extent, progression and therapy, and

- develop public health policies [From KCDC07]

Each of these decomposes into tasks and methods that are driven by needs for high-fidelity data, timely analytics, and high-confidence results delivered to stakeholders. The lessons being learned globally from these new challenges call for additional new capabilities. The new capabilities will support the appropriate rigor, process agility, and high confidence needed to deliver useful information and direct governance actions on a timely basis.

Operational conduct of epidemiological tracing, delivery of therapeutic health care, and development and implementation of health policy all require a level of public transparency. The additional conduct of operations to publicize, educate, and inform the populace about an outbreak also requires that the data be in a format that communicates. Persuasive technologies and the dynamics of social media must be able to provide clear information with transparent depths of detail that create public confidence. [Orji08]

Conexus proposes to use Enterprise Category Theory to integrate these tasks directly into data design, process design, systems implementation and deployment, and operational protocols that provide mathematically rigorous support for processes and people. Our belief is that providing the processed results and confidence in the data will aid the societal processes of policy development and good governance in response to new challenges.

Data Cleanup and Preparation

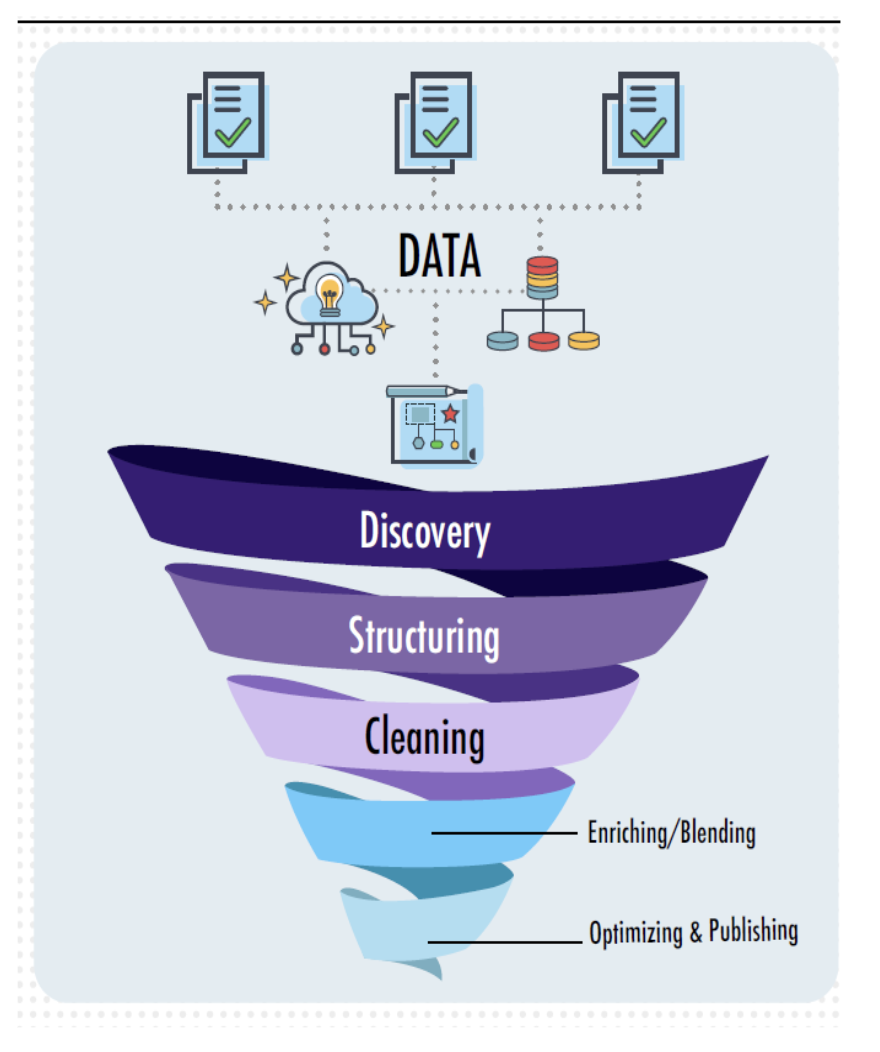

Data science analytics, artificial intelligence, and machine learning projects are widely held in the enterprise IT community to have a cumulative failure rate approaching 85%. Many of these projects fall short of the lofty goals set at their start because data was not cleaned and prepared properly for the processing that follows. Estimates of the time needed to clean up and prepare data run to 65-85% of the elapsed time and resources in data-dependent projects.

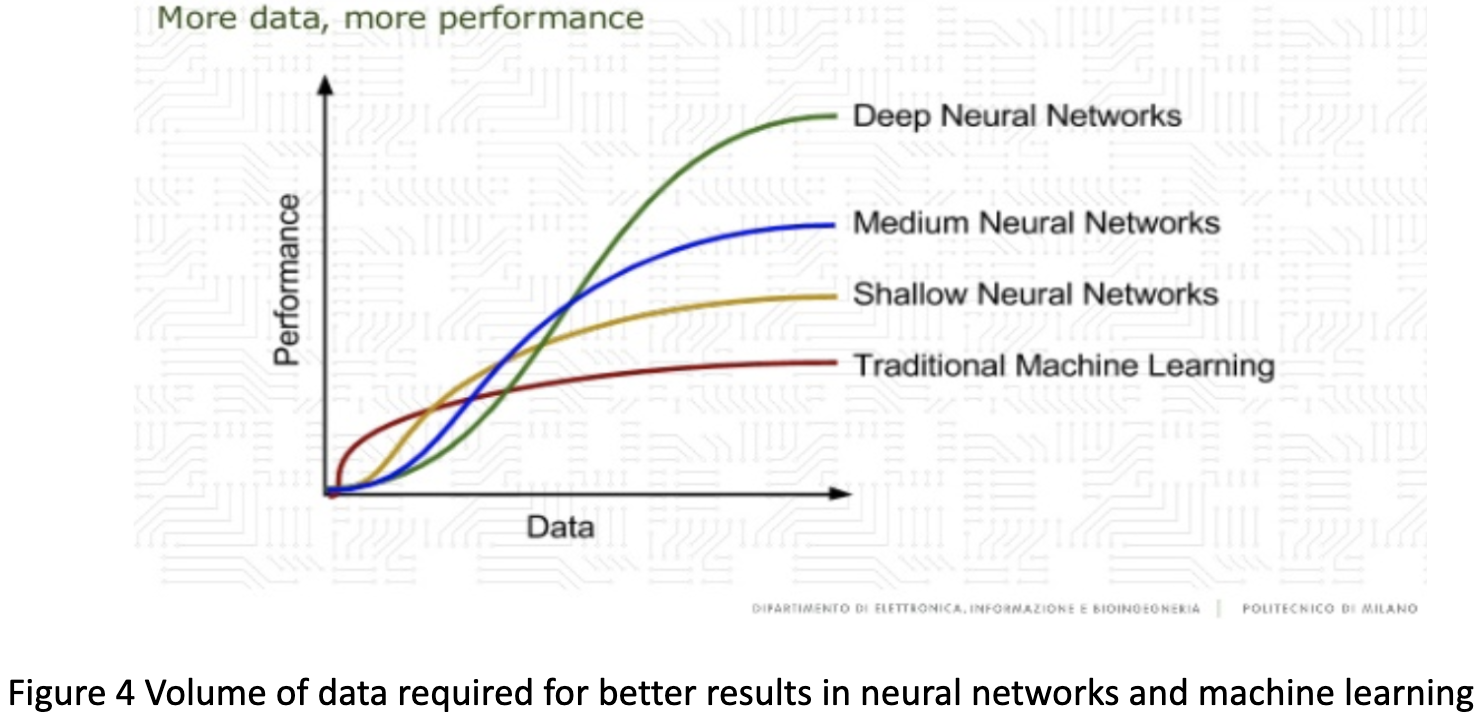

An additional complication is the recognition among practitioners that the volume of data needed to develop, test, and build confidence in complex applications climbs with the complexity of the domain of knowledge. These volumes climb non-linearly.

There are many methods of developing, implementing, and operating the processing tasks to clean and prepare data. The costs and difficulties of doing these functions are considered ‘challenging’ by 76% of financial industry specialists surveyed. [Tran09]

In domains where the volumes of possible data expand rapidly (such as location data from smart devices, tracking data for individuals using CCTV video, or unstructured data streams such as social media and medical records with diagnostic and therapeutic data), the sheer volume becomes its own difficulty. Operating on metadata, aggregated data, or pattern-analysis-based profiles reduces the load and is one of the essential techniques that make timely analytics and reporting possible.
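To show how aggregation reduces load, here is a minimal sketch that collapses raw location pings into counts per device, hour, and coarse grid cell. The tuple layout, the function name `aggregate_pings`, the 0.01-degree cell size, and the sample coordinates are assumptions chosen for illustration only.

```python
import math
from collections import Counter
from datetime import datetime


def aggregate_pings(pings, cell_size_deg=0.01):
    """Collapse raw pings into counts per (device, hour, grid cell).

    Each ping is a hypothetical tuple: (device_id, ISO timestamp, lat, lon).
    """
    counts = Counter()
    for device_id, timestamp, lat, lon in pings:
        # Truncate the timestamp to the start of its hour.
        hour = datetime.fromisoformat(timestamp).replace(minute=0, second=0, microsecond=0)
        # Map the coordinates onto a coarse grid cell index.
        cell = (math.floor(lat / cell_size_deg), math.floor(lon / cell_size_deg))
        counts[(device_id, hour, cell)] += 1
    return counts


raw_pings = [
    ("A", "2020-03-01T10:05:00", 37.5701, 126.9801),
    ("A", "2020-03-01T10:20:00", 37.5704, 126.9806),
    ("A", "2020-03-01T10:45:00", 37.5708, 126.9803),
    ("A", "2020-03-01T11:10:00", 37.5702, 126.9805),
]

summary = aggregate_pings(raw_pings)
for (device_id, hour, cell), n in sorted(summary.items()):
    print(device_id, hour.isoformat(), cell, n)
```

In this example four raw records become two aggregate rows. Downstream analysis then works on the smaller summary, at the cost of losing detail finer than the chosen hour and cell size.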

Ad-hoc applications by their nature have difficulty implementing robust and rigorous data handling, and they often treat metadata, master data management, data provenance, and data lineage poorly at the detail level. Accepting the limitations of purely ad-hoc applications also obscures real limitations in the capability to establish confidence in results and to provide clean and prepared data to downstream uses, and it leads to chaotic data models as ad-hoc applications are forced to adapt and evolve to meet clarified requirements and usability needs.
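One lightweight way to give even an ad-hoc pipeline a basic lineage record is to log a fingerprint of the data after every step. The sketch below is a generic illustration under stated assumptions, not a Conexus product feature: `fingerprint`, `run_with_lineage`, the two cleaning steps, and the sample rows are hypothetical names and data.

```python
import hashlib
import json


def fingerprint(rows):
    """Return a SHA-256 digest of the rows in a canonical JSON form."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_with_lineage(rows, steps):
    """Apply each step in order and record what the data looked like after it."""
    lineage = [{"step": "input", "rows": len(rows), "sha256": fingerprint(rows)}]
    for step in steps:
        rows = step(rows)
        lineage.append({"step": step.__name__, "rows": len(rows), "sha256": fingerprint(rows)})
    return rows, lineage


# Hypothetical cleaning steps.
def drop_missing_results(rows):
    return [r for r in rows if r.get("result") is not None]


def normalize_result_case(rows):
    return [{**r, "result": r["result"].strip().lower()} for r in rows]


lab_rows = [
    {"patient": "P1", "result": " Positive "},
    {"patient": "P2", "result": None},
    {"patient": "P3", "result": "NEGATIVE"},
]

cleaned, lineage = run_with_lineage(lab_rows, [drop_missing_results, normalize_result_case])
for entry in lineage:
    print(entry["step"], entry["rows"], entry["sha256"][:12])
```

A downstream consumer can compare the final digest with the one it receives to confirm that the data was not altered in transit, and the row counts show where records were removed.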

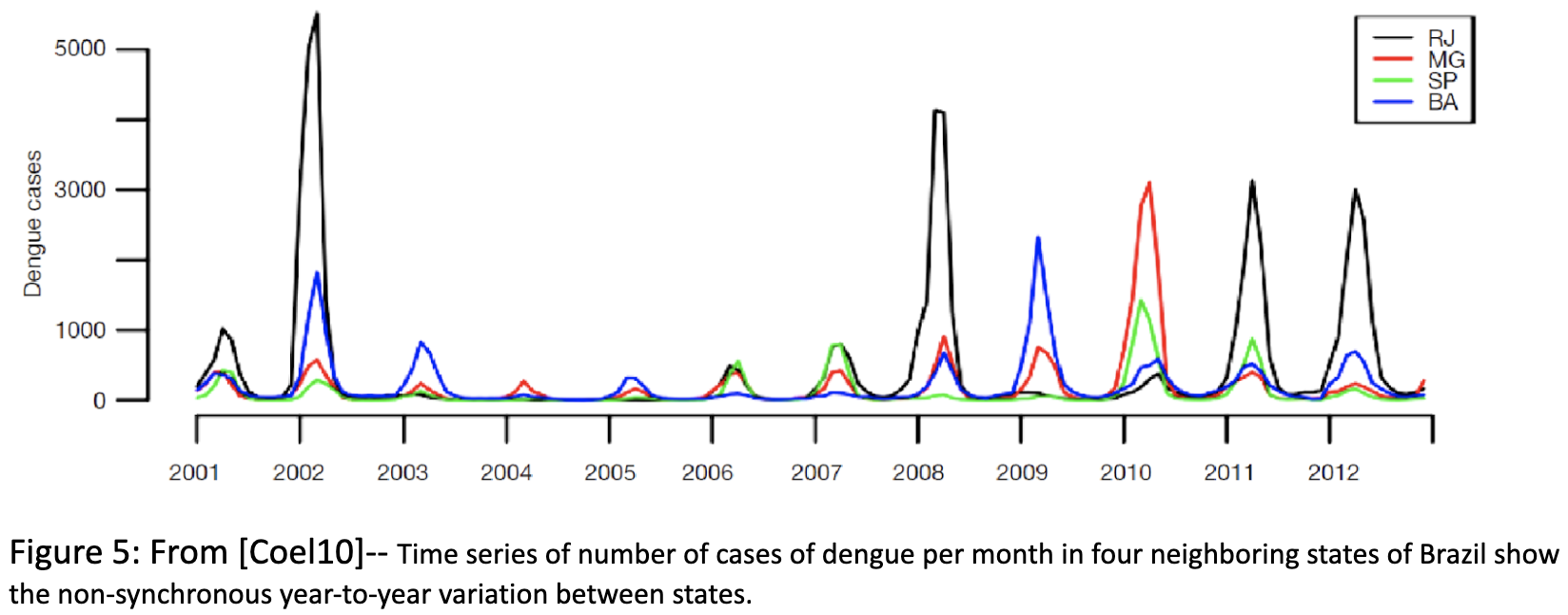

Often ad-hoc applications need to integrate data from existing data silos, feed data to other applications for presentation or delivery, or build history to look for non-obvious patterns (such as have emerged for dengue fever [Coel10]). Connecting data sources and destinations often causes faults that expose a lack of rigor in protecting each side from data quality problems in the other application’s implementation.

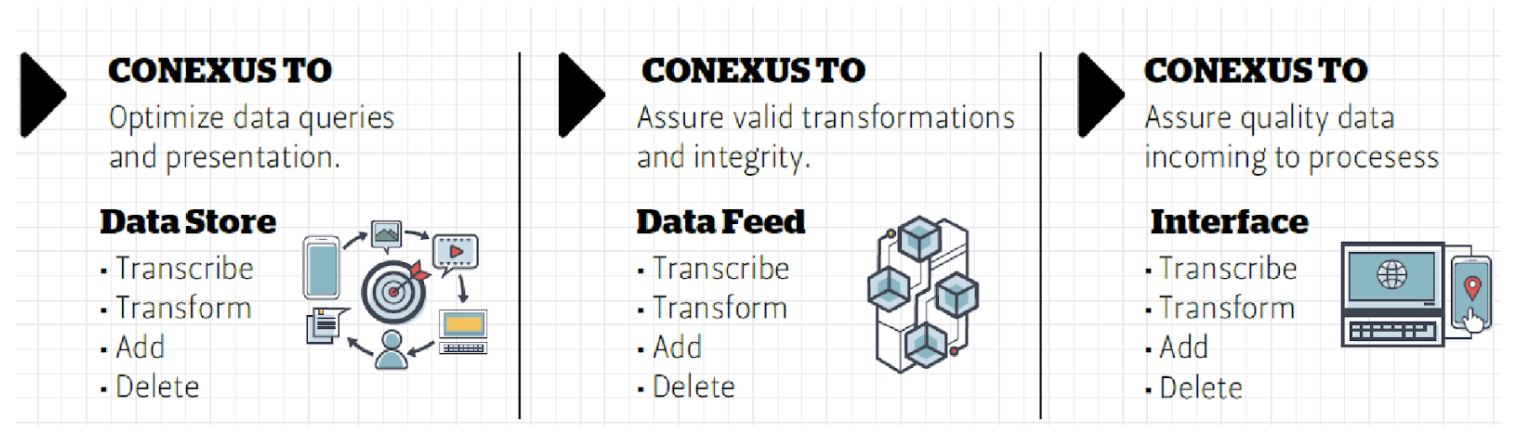

Conexus provides capabilities to improve data models, protect data fidelity, and improve confidence in results by cleaning and preparing data for further processing. These capabilities come from the methodological approaches of EntCT, from the knowledge embedded in our software tools, and from the consistent application of data constraints throughout data processing.

Conexus provides methods and software tools that can be applied at different stages of cleaning and preparing data. These range from methodological steps that identify data flows, data interfaces, and transformations, to process-intensive definitions of transcription and transformation logic, and they result in greater confidence by supporting data fidelity. Enterprise Category Theory assists designers, developers, implementers, and operators of applications in meeting new challenges.

Conclusions

New challenges demand new capabilities in how computing systems respond to national emergencies. Putting aside the obvious answer to “Why Now?”, Conexus believes that we have contributions of value for the research and analysis teams that are tasked to save lives, rebuild economies, and build popular confidence in this time of new challenges.

References

[Conf01] International Conference on Design of Experiments ICODOE 2019, https://www.memphis.edu/msci/icodoe2019/booklet.pdf

[Gold02] Uhoraningoga, A. et al. “The Goldilocks Approach: A Review of Employing Design of Experiments in Prokaryotic Recombinant Protein Production”, Bioengineering, V5N4, https://www.mdpi.com/2306-5354/5/4/89

[Conf03] ConferenceSeries.com. “International Conferences”, https://www.conferenceseries.com/design-of-experiment.php

[Kahn04] Kahn, A., et al. “Lessons to learn from MERS-CoV outbreak in South Korea”, Journal of Infection in Developing Countries 9(6):543-546, June 2015, DOI: 10.3855/jidc.7278

[Flah05] Flahoughty, A., et al. “Infectious disease epidemiology at the digital era in a global Context”, J Public Health Emerg 2019, June 2019, DOI: 10.21037/jphe.2019.05.01

[KCDC06] Korean Centers for Disease Control and Prevention, Press Release, “Updates on COVID-19 in Republic of Korea (as of 17 March)”, https://www.cdc.go.kr/board/board.es?mid=a30402000000&bid=0030&act=view&list_no=366578&tag=&nPage=1

[KCDC07] Korean Centers for Disease Control and Prevention, COVID-19 National Emergency Response Center, Epidemiology & Case Management Team. “Contact Transmission of COVID-19 in South Korea: Novel Investigation Techniques for Tracing Contacts”, Osong Public Health Res Perspect 2020;11(1):60-63

[Orji08] Orji, R., et al. “Persuasive technology for health and wellness: State-of-the-art and emerging trends”, Health Informatics Journal 2018, Vol. 24(1) 66–91 DOI: 10.1177/1460458216650979

[Tran09] TransUnion. “Survey of Financial Industry Executives”, 2019

[Coel10] Coelho, F.C., et al. “Precision epidemiology of arboviral diseases”, J Pub Health Emerg, V2019;3:1